Tweets, Runs and the Minnesota Vikings

David Bradnum, Christopher Lovell, Pedro Santos and Nick Vaughan.

Bank of England, 18 August 2015

Could Twitter help predict a bank run? That was the question a group of us were tasked with answering in the run-up to the Scottish independence referendum. To investigate, we built an experimental system in just a few days to collect and analyse tweets in real time. In the end, fears of a bank run were not realised, so the jury is still out on Twitter. Even so, we learnt a lot about social media analysis (and a little about American Football), and we argue that text analytics more generally has much potential for central banks.

Background

On 18th September 2014, Scottish residents went to the polls to answer the question “Should Scotland be an independent country?” At the time, concerns were raised about the potential impact a ‘yes’ result might have on the UK financial system. Indeed, the Governor highlighted that “uncertainty about the currency arrangements could raise financial stability issues.”

The group took an experimental approach, using social media as a lens through which to observe developments in real time. We analysed traffic on the Twitter micro-blogging platform in the run-up to the vote, searching for tweets containing terms or phrases that might indicate depositors preparing to withdraw money from Scottish financial institutions. The aim was to monitor the volume of traffic matching these search terms and to provide an early warning in the event of a spike.
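The post does not say how a "spike" was defined. As a minimal sketch, assuming an hourly series of matching-tweet counts, one option is to flag any hour that sits well above its recent history. The function name `flag_spikes` and its parameters (a trailing 24-hour window, a three-standard-deviation threshold and a minimum count) are our own illustrative choices, not the settings used in the project.

```python
from statistics import mean, stdev


def flag_spikes(hourly_counts, window=24, k=3.0, min_count=5):
    """Return the indices of hours whose count jumps above a trailing baseline.

    Hypothetical rule: an hour is flagged when its count is at least
    `min_count` and exceeds the mean of the previous `window` hours by more
    than `k` standard deviations.
    """
    if window < 2:
        raise ValueError("window must cover at least two hours")
    flagged = []
    for i in range(window, len(hourly_counts)):
        history = hourly_counts[i - window:i]
        # Floor the spread at 1 so a near-constant history does not make
        # the threshold so tight that ordinary noise triggers an alert.
        threshold = mean(history) + k * max(stdev(history), 1.0)
        if hourly_counts[i] >= min_count and hourly_counts[i] > threshold:
            flagged.append(i)
    return flagged


# A quiet day followed by a sudden burst in hour 25.
counts = [2, 3] * 12 + [3, 40, 2]
print(flag_spikes(counts))  # [25]
```

In a low-volume series like this one, the minimum count stops a handful of tweets from raising an alert on its own; any real threshold would need tuning against the data.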

Why social media?

Every second, on average, around 6,000 tweets are published worldwide: that’s about 350,000 per minute, 500 million per day and 200 billion tweets per year. Clearly, Twitter users do not form a representative sample of the general population (illustrated by this survey, for instance); and there are obvious challenges in interpreting 140-character snippets of text or extracting a signal from the plentiful noise. But even so, there are some advantages to analysing this huge amount of data compared with traditional news outlets or financial market indicators.

A key differentiator from traditional media is timeliness: any individual can tweet from almost any location at any time. And those tweets can be captured and analysed almost immediately, too. The flow of data continues 24/7, allowing you to monitor sentiment after markets close and before they reopen.

Also, when looking at aggregated data sources, the causes of trends and patterns are often unclear. By contrast, free text typically refers explicitly to named entities and to the emotions associated with them. In the case of social media especially, you often find links to additional information (e.g. external news articles, or other influential individuals and organisations) that can provide valuable context and understanding.
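As a small illustration of pulling that context out of a tweet, the sketch below uses two simplified regular expressions (our own assumptions) to extract links and @-mentions from raw text. In practice, Twitter's API also delivers these as structured entities attached to each tweet, which is generally more reliable than parsing the text; the function name `extract_context` is hypothetical.

```python
import re

# Simplified patterns (assumptions): a URL runs until the next whitespace,
# and a mention is "@" plus up to 15 word characters not preceded by one.
URL_PATTERN = re.compile(r"https?://\S+")
MENTION_PATTERN = re.compile(r"(?<!\w)@(\w{1,15})")


def extract_context(text):
    """Return the URLs and mentioned account names found in a tweet."""
    return URL_PATTERN.findall(text), MENTION_PATTERN.findall(text)


urls, mentions = extract_context(
    "Long queues reported, via @newsdesk https://example.com/story"
)
print(urls)      # ['https://example.com/story']
print(mentions)  # ['newsdesk']
```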

Finally, some authors choose to disclose geographic information about their location. Given sufficient data, this means you can build more granular views at a regional level, rather than having a single index for the entire country.
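Once tweets carry a location, a regional view is essentially a group-by. A minimal sketch, assuming each tweet has already been mapped to a region name under a hypothetical `region` field (converting raw coordinates into regions is a separate step not shown here):

```python
from collections import Counter


def regional_counts(tweets):
    """Count tweets per region, skipping those with no disclosed location.

    Each tweet is assumed to be a dict; the "region" key is hypothetical
    and is missing or None when the author shares no location.
    """
    return Counter(t["region"] for t in tweets if t.get("region"))


sample = [
    {"text": "...", "region": "Glasgow"},
    {"text": "...", "region": "Edinburgh"},
    {"text": "...", "region": "Glasgow"},
    {"text": "..."},  # no location disclosed
]
print(regional_counts(sample))  # Counter({'Glasgow': 2, 'Edinburgh': 1})
```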

Why this approach?

Our approach to filtering tweets was relatively narrow. We chose to search for a range of terms indicating financial stability concerns or referencing specific institutions, together with some link to the referendum or Scotland itself. Clearly, we could have widened the search criteria and gathered a substantially larger amount of data on a wider range of topics. This would almost certainly have captured more relevant information, but also a much higher volume of noise, and potentially a series of false alarms requiring manual intervention to investigate. Instead, we recognised that there was no need to capture every single relevant tweet, and felt confident that any material change would still be visible swiftly via our filters.
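The two-part rule described here (a financial stability term combined with a link to Scotland or the referendum) can be sketched as a simple boolean filter. The term lists and function names are hypothetical placeholders: the post does not disclose the actual search terms, and in the project itself the terms were sent to the data provider, so this local version is purely illustrative.

```python
import re

# Hypothetical term lists for illustration only.
CONCERN_TERMS = ["bank run", "withdraw", "cash machine", "savings"]
CONTEXT_TERMS = ["scotland", "scottish", "referendum", "indyref"]


def contains_any(text, terms):
    """True if any term appears in text as a whole word or phrase, ignoring case."""
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE) for term in terms
    )


def matches_filter(text):
    """Keep a tweet only if it pairs a concern term with a Scotland/referendum term."""
    return contains_any(text, CONCERN_TERMS) and contains_any(text, CONTEXT_TERMS)


print(matches_filter("Moving my savings south before the referendum"))  # True
print(matches_filter("Great savings in the shops today"))  # False
```

Requiring both groups to match is what keeps the volume low: a tweet about savings alone, or about the referendum alone, is discarded.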

What happened?

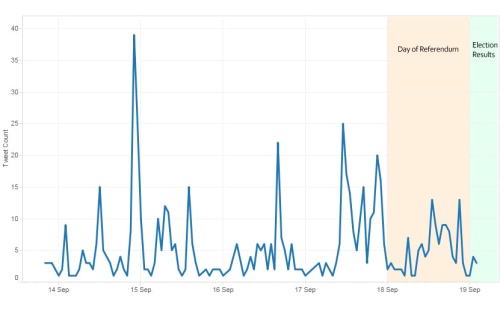

The chart below shows the number of tweets collected, as an hourly time series. Given the tight filters we applied, the volumes are actually rather small – but in fact, this was helpful in allowing us to diagnose any remaining peculiarities.

Tweet volume time series

Source: Twitter, Datasift, Bank of England calculations
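Producing an hourly series like the one in the chart amounts to truncating each tweet's timestamp to the hour and counting. A minimal sketch, assuming naive timestamps in a single time zone; the function name `hourly_series` is hypothetical. Filling empty hours with zeros keeps the series regular, which matters if it feeds an alerting rule.

```python
from collections import Counter
from datetime import datetime, timedelta


def hourly_series(timestamps):
    """Return (hour, count) pairs for every hour from the first tweet to the last.

    Hours with no tweets are included with a count of zero.
    """
    if not timestamps:
        return []
    counts = Counter(ts.replace(minute=0, second=0, microsecond=0) for ts in timestamps)
    hour, last = min(counts), max(counts)
    series = []
    while hour <= last:
        series.append((hour, counts.get(hour, 0)))
        hour += timedelta(hours=1)
    return series


tweets = [
    datetime(2014, 9, 15, 1, 5),
    datetime(2014, 9, 15, 1, 59),
    datetime(2014, 9, 15, 3, 0),
]
for hour, n in hourly_series(tweets):
    print(hour.isoformat(), n)
```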

For example, our attention was drawn to a spike in the early hours of Monday 15th September. However, we quickly realised that this was driven by American Football, rather than events closer to home!

On closer examination, it transpired that we were looking at a series of tweets and retweets about the Minnesota Vikings. These had been captured because they combined the term “run” with the abbreviation “RBs”. But in this context, the reference was to running backs and not the Royal Bank of Scotland (RBS)! To prevent a repeat, we adjusted the search terms to exclude that particular pattern of upper- and lower-case characters.
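The fix comes down to case sensitivity. A minimal sketch, using a hypothetical whole-word pattern for "RBS" (the post does not give the exact search terms), shows how a case-insensitive match picks up "RBs" while a case-sensitive one does not. The trade-off is that case-sensitive matching also misses genuine mentions typed in lower case, such as "rbs".

```python
import re


def mentions_rbs(text, case_sensitive=True):
    """True if text contains "RBS" as a whole word.

    Hypothetical pattern. With case_sensitive=False, "RBs" (running backs)
    also matches, reproducing the Minnesota Vikings false positive.
    """
    flags = 0 if case_sensitive else re.IGNORECASE
    return re.search(r"\bRBS\b", text, flags) is not None


football = "Vikings RBs run riot in the second half"
banking = "Long queue to withdraw cash at RBS this morning"

print(mentions_rbs(football, case_sensitive=False))  # True: the false positive
print(mentions_rbs(football))  # False
print(mentions_rbs(banking))   # True
```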

Tweets were monitored for a full week, with particular attention paid to the night of the referendum vote. In the end, there was little traffic, as the ‘no’ result became increasingly apparent throughout the morning. Even so, this was a valuable exercise, building capabilities and knowledge that will serve as a foundation for future projects.

In particular, the project presented a number of IT challenges. Our search terms needed to be transmitted securely to the data provider: had it become known that we were interested in particular firms, that alone could have caused the very run we were concerned about. We also needed to stream, store and query these data in real time, which we achieved using technologies new to the Bank, opening up new avenues for analysis and research.

What next?

Although in this case we employed a very specific approach to address a particular challenge, there are broader opportunities to be explored. Indeed, greater volumes of Twitter data can reveal broader patterns of human activity and their impact on the economy. As an example, Llorente et al. analyse 19 million geo-tagged tweets from 340 different Spanish regions, searching for indicators of unemployment. Even though the proportion of tweets that are geo-tagged is very small (around 1%), they are able to estimate regional unemployment from just a few social media markers, such as mobility patterns and communication styles.

A similar paper from Antenucci et al. creates indices for job loss and job search activity by searching for tweets containing phrases such as “laid off”. Both papers’ indices could be calculated in real time, far in advance of official statistics and their subsequent revisions. However, as people’s behaviour changes and language evolves, such models may lose their predictive power; the latter model appears to be a case in point.
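To give a flavour of how a phrase-based index can be built, the sketch below computes, for each ISO week, the share of tweets containing any of a few job-loss phrases. It is a deliberately simplified stand-in: the phrase list and the function name `weekly_phrase_share` are hypothetical, and Antenucci et al.'s published method is more involved than a single phrase share.

```python
from collections import defaultdict
from datetime import date

# Hypothetical phrase list, for illustration only.
JOB_LOSS_PHRASES = ("laid off", "lost my job", "got fired")


def weekly_phrase_share(tweets):
    """Share of tweets per ISO week that contain any job-loss phrase.

    `tweets` is an iterable of (date, text) pairs.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for day, text in tweets:
        week = day.isocalendar()[:2]  # (ISO year, ISO week number)
        totals[week] += 1
        lowered = text.lower()
        if any(phrase in lowered for phrase in JOB_LOSS_PHRASES):
            hits[week] += 1
    return {week: hits[week] / totals[week] for week in sorted(totals)}


example = [
    (date(2014, 9, 15), "Just got laid off today"),
    (date(2014, 9, 16), "Lovely weather in Glasgow"),
    (date(2014, 9, 22), "First day back at work"),
]
print(weekly_phrase_share(example))  # {(2014, 38): 0.5, (2014, 39): 0.0}
```

Dividing by the total number of tweets in each week, rather than using raw counts, stops growth in overall Twitter usage from looking like a rise in job loss.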

Twitter is certainly not the only potential source of text information either. Others (e.g. blogs like this one!) are more verbose, and that volume of content opens the door to more advanced techniques.

For example, Baker, Bloom and Davis estimate economic policy uncertainty by counting the number of articles in major US and European newspapers that contain relevant words. More sophisticated techniques have been applied to central banks’ own communications too. For instance, Hansen, McMahon and Prat use probabilistic topic models to study the effects of greater transparency on deliberations within the US Federal Open Market Committee (FOMC). And Vallès and Schonhardt-Bailey analyse Monetary Policy Committee minutes and members’ speeches to understand whether central banks are communicating a consistent message through various channels.
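A stripped-down version of the article-counting idea, in the spirit of Baker, Bloom and Davis but not their exact method: an article counts only if it contains at least one term from each of three lists (economy, policy, uncertainty), and the count is scaled by the total number of articles. The term lists and function names below are illustrative assumptions; their paper sets out the actual terms, newspaper coverage and normalisation.

```python
# Illustrative term lists (assumptions, not the authors' exact lists).
ECONOMY = ("economy", "economic")
POLICY = ("regulation", "legislation", "central bank", "deficit")
UNCERTAINTY = ("uncertain", "uncertainty")


def is_epu_article(text):
    """True if the article mentions at least one term from every group.

    Uses plain substring matching, so "economic" also matches "economics".
    """
    lowered = text.lower()
    return all(
        any(term in lowered for term in group)
        for group in (ECONOMY, POLICY, UNCERTAINTY)
    )


def epu_share(articles):
    """Fraction of articles mentioning economy, policy and uncertainty together."""
    articles = list(articles)
    if not articles:
        return 0.0
    return sum(is_epu_article(a) for a in articles) / len(articles)


articles = [
    "Economic uncertainty rises as new regulation looms",
    "The economy grew strongly",
    "Uncertain weather ahead",
]
print(epu_share(articles))
```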

Conclusion

Social media provides a rich vein from which to mine information, and text data more generally can reveal trends in people’s opinions and sentiment on specific topics or events. The Scottish Referendum gave us a great opportunity to do this in a time-critical context, and provided valuable live insights.

Kevin Warsh, commenting on transparency and the Monetary Policy Committee, remarked that while “studies seeking to make sense of millions of spoken words” are “daunting and imperfect”, text mining has “meaningfully advanced our understanding” of central banks. And as this post illustrates, central banks are themselves adopting these tools and techniques across a wide range of potential applications, building more agile and wide-ranging data analysis capabilities for the future.

David Bradnum, Christopher Lovell & Pedro Santos work in the Bank’s Advanced Analytics Division, and Nick Vaughan works in the Bank’s IT Build & Maintain Division.

Bank Underground is a blog for Bank of England staff to share views that challenge – or support – prevailing policy orthodoxies. The views expressed here are those of the authors, and are not necessarily those of the Bank of England, or its policy committees.

If you want to get in touch, please email us at bankunderground@bankofengland.co.uk